The integration of artificial intelligence with 3D modeling represents one of the most exciting developments in contemporary filmmaking. As generative AI continues to evolve, Runway ML has emerged as a powerful platform that’s transforming how filmmakers approach 3D assets in their productions. This convergence of technologies is opening up new creative possibilities while simultaneously addressing longstanding challenges in the 3D production pipeline.

Understanding the 3D Revolution in Runway

Runway ML has significantly expanded its capabilities beyond 2D image generation and video synthesis to include robust 3D modeling features. These tools are changing how filmmakers conceptualize, create, and integrate three-dimensional elements in their work. Let’s explore the fundamental capabilities that make Runway’s 3D offerings so valuable to filmmakers:

Text-to-3D Generation

One of Runway’s most transformative features is its ability to generate 3D models directly from text descriptions. This capability allows filmmakers to quickly materialize conceptual ideas without requiring specialized 3D modeling expertise. By simply describing an object, character, or environment in natural language, directors can now produce initial 3D assets that would have previously required days or weeks of work from specialized 3D artists.

For example, independent filmmaker Sarah Chen was able to generate the alien artifacts central to her short film “Remnants” using Runway’s text-to-3D capabilities. By crafting detailed textual descriptions that specified the artifacts’ organic-mechanical hybrid nature, weathered surface textures, and unusual geometric properties, Chen created unique objects that perfectly matched her artistic vision without needing to hire a specialized 3D modeling team.

Image-to-3D Conversion

Runway also excels at transforming 2D images into 3D models – a process that traditionally required extensive manual reconstruction. This feature enables filmmakers to create 3D assets from reference photographs, concept art, or even Midjourney-generated images. The resulting 3D models maintain the visual characteristics of the original images while gaining volumetric properties that allow them to be viewed and manipulated from any angle.

Documentary filmmaker Miguel Hernandez leveraged this capability in his short film “Forgotten Spaces,” which explored abandoned industrial sites. By photographing architectural elements from these locations and converting them to 3D models using Runway, Hernandez was able to create virtual environments that allowed viewers to explore these spaces from perspectives that would have been physically impossible to capture with traditional filming methods.

Neural Rendering and Material Generation

Beyond basic modeling, Runway offers sophisticated neural rendering capabilities that automatically generate realistic materials, textures, and lighting properties for 3D objects. This feature addresses one of the most time-consuming aspects of traditional 3D workflows – the creation of convincing surface properties that respond realistically to light.

Animator Priya Patel utilized this feature extensively in her short “The Clockmaker,” where intricate mechanical objects needed to display various metal finishes, from polished brass to tarnished steel. Rather than manually texturing each component, Patel used Runway’s neural rendering to generate physically accurate materials that responded realistically to the lighting conditions in each scene, creating a level of visual richness that would have been prohibitively time-consuming using conventional methods.

The Midjourney-Runway 3D Pipeline

Many innovative filmmakers are developing workflows that combine Midjourney’s exceptional image generation capabilities with Runway’s 3D tools. This integrated approach leverages the strengths of both platforms to create a more comprehensive asset creation pipeline:

Concept Visualization with Midjourney

The process typically begins with Midjourney, where filmmakers generate detailed visual concepts through carefully crafted prompts. These images serve as the foundational visual reference for characters, environments, props, and other elements that will eventually become 3D assets.

Filmmaker Jordan Lee used this approach for his fantasy short “The Cartographer’s Daughter.” Lee began by generating dozens of detailed Midjourney images depicting the film’s magical mapping tools, fantastical landscapes, and character designs. These images established a consistent visual language for the project and served as the conceptual foundation for all subsequent 3D work.

Translation to 3D via Runway

Once the visual direction is established through Midjourney images, these references are brought into Runway for 3D conversion. Filmmakers can either use Runway’s image-to-3D capabilities directly on the Midjourney outputs or use the images as reference while crafting text prompts for Runway’s text-to-3D generation.

Continuing his workflow, Lee imported his key Midjourney concept images into Runway and used the platform’s image-to-3D conversion to create initial 3D models of the most important elements. For more complex structures or characters that required greater specificity, he crafted detailed text descriptions that referenced the visual characteristics established in the Midjourney images while adding information about volumetric properties that couldn’t be inferred from 2D references.

Refinement and Integration

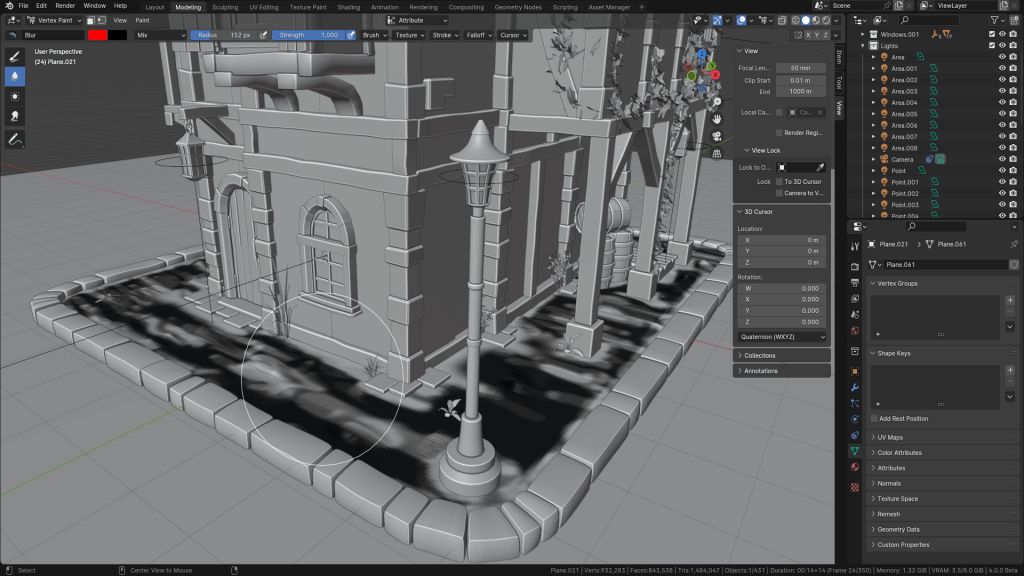

After generating the initial 3D assets, filmmakers typically refine these models through a combination of AI-assisted tools and traditional 3D software. Runway offers various modification capabilities, while the exported models can also be further developed in programs like Blender, Maya, or Cinema 4D.

Lee exported his Runway-generated assets to Blender for final refinements, animation rigging, and integration into his scenes. The AI-generated models got him roughly 70-80% of the way to the finished assets. This significantly reduced the time spent on traditional modeling while leaving the final stages of production under his precise artistic control.

Practical Applications in Short Film Production

The integration of Runway’s 3D capabilities into filmmaking workflows is creating new production methodologies at every stage of the creative process:

Pre-Visualization and Planning

Directors are increasingly using Runway’s 3D tools to create detailed pre-visualizations of complex scenes. By quickly generating 3D environments and characters, filmmakers can explore camera movements, lighting setups, and blocking arrangements before committing to specific production approaches.

Director Alex Winters used this methodology extensively when planning his science fiction short “Orbital Decay.” By generating 3D models of his spacecraft interiors, space station environments, and key props, Winters was able to create detailed animatics that guided his physical set construction and helped communicate his vision to the production design team, resulting in significant cost savings and more efficient use of limited resources.

Virtual Production Integration

Runway-generated 3D assets are proving particularly valuable in virtual production environments, where they can be integrated with game engines like Unreal Engine to create interactive digital backdrops for LED volume filming.

Independent filmmaker Tasha Reynolds combined Runway-generated 3D environments with an affordable LED setup for her short film “Meridian.” She displayed Runway-created 3D landscapes on the LED panels. This let Reynolds achieve the visual scope of locations she could never have visited on her limited budget, while still capturing in-camera effects like realistic lighting on her actors.

VFX Enhancement and Replacement

Filmmakers are also using Runway’s 3D capabilities to generate specific visual effects elements or to replace placeholder objects in post-production.

Director Carlos Fuentes shot his science fiction short “Convergence” using simple geometric stand-ins for the film’s robotic characters. In post-production, he used Runway to generate detailed 3D models of the robots, which were then tracked and composited into the original footage. This approach allowed for organic interactions between human actors and what would eventually become complex 3D elements, without requiring a full CGI pipeline during production.

Success Stories and Case Studies

Several filmmakers have achieved remarkable results by incorporating Runway’s 3D tools into their production workflows:

“Ephemeral” by Naomi Wright

Wright’s experimental short film explores the boundary between physical and digital existence through the story of a character who gradually transforms into a digital entity. The film’s visual approach required the progressive conversion of the protagonist from a human form to an increasingly abstract digital representation.

Wright began by filming her actor against a green screen, then used Runway’s 3D tools to generate a series of increasingly abstract digital avatars based on the actor’s appearance. These 3D models were then animated to match the actor’s movements and composited back into the footage, creating a seamless transition from physical to digital that served as a powerful visual metaphor for the film’s themes.

What made “Ephemeral” particularly notable was Wright’s methodical approach to maintaining visual continuity throughout the transformation. By using the same source imagery as a reference point for each stage of the character’s evolution, she achieved a coherent progression that felt like a single continuous transformation rather than a series of disconnected visual effects.

“Terraform” by Studio Panorama

This collaborative short film project explored speculative architectural designs that adapt to environmental changes. The production team used Runway’s text-to-3D capabilities to generate evolving architectural structures based on descriptions of how buildings might respond to different climate conditions.

The resulting film presents a series of architectural simulations that would have been prohibitively expensive to create using traditional 3D modeling workflows. The team was able to generate dozens of architectural variations in the time it would typically take to model a single structure, allowing for a more comprehensive exploration of the film’s conceptual premise.

“Terraform” received recognition at several architecture and film festivals for its innovative approach to visualizing adaptive design. The project demonstrated how AI-generated 3D models can serve not just as visual effects elements but as the central subject of films exploring speculative or conceptual ideas.

“Vestige” by Eliot Kim

Kim’s documentary-narrative hybrid explores archaeological discoveries through a combination of conventional documentary footage and speculative reconstructions of ancient sites. The production faced the challenge of visualizing archaeological locations based on limited physical evidence.

Using Runway’s 3D generation capabilities, Kim created detailed reconstructions of partially excavated structures based on archaeological data and expert descriptions. These models were then integrated into footage of the actual excavation sites, allowing viewers to see the complete structures as they might have appeared originally, superimposed over their current remains.

The project received praise from both filmmaking and archaeological communities for its innovative approach to historical visualization. Kim’s process demonstrated how AI-generated 3D models can serve educational and documentary purposes by making abstract or incomplete information more tangible and comprehensible.

Technical Workflows and Integration Strategies

Successful implementation of Runway’s 3D capabilities typically involves developing structured workflows that integrate with other production tools and processes:

From Concept to Screen: A Typical 3D Workflow

- Conceptualization: Define the visual requirements for 3D elements, often beginning with Midjourney-generated concept images or traditional concept art.

- Initial 3D Generation: Use Runway’s text-to-3D or image-to-3D features to create base models that capture the fundamental characteristics of the desired assets.

- Refinement: Export the initial models to traditional 3D software for detailed adjustments, rigging, animation setup, or other specific modifications.

- Scene Integration: Incorporate the refined 3D assets into your production environment, whether that’s a 3D animation scene, a visual effects composition, or a virtual production setup.

- Rendering and Compositing: Render the final 3D elements and integrate them with live-action footage or other visual components through compositing.
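On a short with many assets, it can help to track where each one sits in these five stages. The sketch below is a hypothetical illustration and is not part of Runway, Blender, or any other tool. The `Stage` and `Asset` names and the `pending` helper are invented for this example. It only records each asset’s current stage and enforces the order listed above.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Stage(IntEnum):
    """The five workflow stages, in the order listed above (hypothetical names)."""
    CONCEPTUALIZATION = 1
    INITIAL_GENERATION = 2
    REFINEMENT = 3
    SCENE_INTEGRATION = 4
    RENDERING_AND_COMPOSITING = 5


@dataclass
class Asset:
    """Hypothetical record tracking one 3D asset through the pipeline."""
    name: str
    stage: Stage = Stage.CONCEPTUALIZATION
    notes: list[tuple[str, str]] = field(default_factory=list)

    def advance(self, note: str) -> Stage:
        """Move to the next stage and log a note describing the work done there."""
        if self.stage == Stage.RENDERING_AND_COMPOSITING:
            raise ValueError(f"{self.name!r} is already at the final stage")
        self.stage = Stage(self.stage + 1)  # stages cannot be skipped
        self.notes.append((self.stage.name, note))
        return self.stage


def pending(assets: list[Asset], stage: Stage) -> list[str]:
    """Return names of assets that have not yet reached the given stage."""
    return [a.name for a in assets if a.stage < stage]


if __name__ == "__main__":
    artifact = Asset("alien artifact")
    artifact.advance("base mesh from image-to-3D")
    artifact.advance("cleanup and rigging in Blender")
    robot = Asset("robot")
    print(artifact.stage.name)
    print(pending([artifact, robot], Stage.REFINEMENT))
```

Calling `pending(..., Stage.REFINEMENT)` here lists only `robot`, which is still at the concept stage. Because `Stage` is an `IntEnum`, the order comparison in `pending` follows the workflow order directly.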

Optimizing Runway Prompts for 3D Generation

The quality of 3D models generated by Runway depends significantly on the specificity and structure of the prompts used. Successful filmmakers have developed prompt engineering techniques specifically for 3D generation:

- Include volumetric descriptions: Specify how the object exists in three-dimensional space, including details about its depth, internal structure, or back side that might not be apparent from a single viewpoint.

- Reference material properties: Describe the physical characteristics of surfaces, including reflectivity, transparency, texture, and how they might respond to light.

- Establish scale relationships: Provide context for the size of objects by relating them to familiar references, which helps the AI generate appropriate proportions and details.

- Define functional aspects: For mechanical or interactive objects, describe how different components relate to each other functionally, which helps generate more coherent internal structures.
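You can apply these four techniques consistently by assembling each prompt from labeled parts. The following is a hypothetical sketch. `Prompt3D` and its field names are invented here. It only builds a text string that you would paste into a text-to-3D prompt field, and it does not call any Runway API. Whether labeled sections like "Materials:" improve Runway’s output is an assumption to test, not documented behavior.

```python
from dataclasses import dataclass, field


@dataclass
class Prompt3D:
    """Hypothetical helper: one field per prompt technique described above."""
    subject: str
    volumetric: list[str] = field(default_factory=list)  # depth, internal structure, back side
    materials: list[str] = field(default_factory=list)   # reflectivity, transparency, texture
    scale: str = ""                                      # size relative to a familiar reference
    functional: list[str] = field(default_factory=list)  # how components relate to each other

    def render(self) -> str:
        """Join the non-empty parts into one prompt string, one sentence per part."""
        parts = [self.subject.rstrip(". ")]  # drop a trailing period to avoid doubling it
        if self.volumetric:
            parts.append("Form and depth: " + "; ".join(self.volumetric))
        if self.materials:
            parts.append("Materials: " + "; ".join(self.materials))
        if self.scale:
            parts.append("Scale: " + self.scale)
        if self.functional:
            parts.append("Function: " + "; ".join(self.functional))
        return ". ".join(parts) + "."


if __name__ == "__main__":
    prompt = Prompt3D(
        subject="An antique clockwork escapement mechanism",
        volumetric=["open back exposing the gear train", "gears stacked three layers deep"],
        materials=["polished brass gears", "tarnished steel arbors"],
        scale="about the size of a matchbox",
        functional=["escape wheel teeth engage the pallet fork"],
    )
    print(prompt.render())
```

Keeping each technique in its own field makes it easy to vary one aspect, such as materials, while holding the rest of the description fixed across generations.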

Ethical Considerations and Best Practices

As with any use of AI in creative fields, Runway’s 3D tools raise important ethical considerations:

Attribution and Transparency

Responsible filmmakers are establishing clear standards for disclosing the use of AI-generated 3D assets in their work. This transparency helps audiences understand the creative process while properly acknowledging the technological platforms that enabled it.

Training Data Awareness

Creators should remain mindful that AI systems like Runway are trained on existing 3D datasets, which may include biases or tendencies to reproduce familiar forms. Being aware of these limitations helps filmmakers use the technology more intentionally and potentially counter these biases through careful prompt engineering.

Supplementing Rather Than Replacing Human Creativity

The most compelling implementations of Runway’s 3D capabilities position AI as an extension of human creativity rather than a replacement for it. The technology serves as a collaborative tool that expands what’s possible while still requiring human direction, refinement, and contextual understanding.

Learning Resources and Community Support

For filmmakers interested in exploring Runway’s 3D capabilities, several resources have emerged to support the learning process:

AI Filmmaker Studio (https://www.ai-filmmaker.studio) has established itself as a comprehensive resource for filmmakers looking to incorporate AI tools, including Runway’s 3D features, into their production workflows. The platform offers specialized guidance on:

- Crafting effective prompts specifically for 3D generation in Runway

- Developing integrated workflows that combine Midjourney, Runway, and traditional 3D software

- Technical considerations for preparing AI-generated 3D assets for film production

- Case studies examining successful implementations in independent and commercial productions

Their research-based approach provides filmmakers with both practical techniques and theoretical frameworks for understanding how these emerging technologies can be effectively integrated into creative processes.

The Future of AI-Generated 3D in Filmmaking

As Runway and similar platforms continue to evolve, we can anticipate several developments that will further transform how 3D elements are created and used in film production:

Increasing Fidelity and Control

Future iterations of Runway’s 3D capabilities will likely offer greater fidelity and more precise control over generated assets. This progression will narrow the gap between AI-generated models and those created through traditional methods, potentially reaching a point where the distinction becomes primarily one of process rather than result.

Animation Integration

While current implementations focus primarily on static 3D model generation, we can expect increased capabilities for generating animated 3D assets directly from textual descriptions of movement. This development would address one of the most time-consuming aspects of 3D integration in filmmaking.

Real-Time Generation and Modification

As processing capabilities improve, we may see Runway and similar tools move toward real-time generation and modification of 3D assets. This evolution would enable more interactive creative processes, potentially allowing directors to adjust 3D elements during virtual production sessions rather than requiring pre-generation and preparation.

Personalized Aesthetic Development

As more filmmakers work with these tools, we’ll likely see distinctive approaches to prompt engineering and model refinement emerge that reflect individual aesthetic sensibilities. Directors develop personal visual styles with traditional filmmaking tools. In the same way, recognizable signatures are likely to appear in AI-generated 3D content.

Conclusion

The integration of Runway ML’s 3D modeling capabilities with filmmaking workflows represents a fundamental shift in how three-dimensional content is conceptualized and created for screen media. By dramatically reducing the technical barriers to 3D asset generation, these tools are democratizing access to visual techniques that were previously available only to productions with substantial resources and specialized teams.

The most innovative implementations of these technologies use AI not simply as a cost-saving measure. They also use it to expand creative possibilities, achieve visual expressions that would otherwise be unattainable, and allocate human creativity more intentionally throughout the production process.

As these tools continue to evolve and creative methodologies mature, we can anticipate an exciting period of experimentation and innovation in how three-dimensional elements are integrated into film narratives. For filmmakers looking to explore these possibilities, resources like AI Filmmaker Studio provide valuable guidance in navigating this rapidly evolving landscape, offering both technical instruction and creative inspiration for those ready to embrace the future of AI-enhanced 3D production.

Discover more from AI Film Studio
